Ropes — Fast Strings

AlgorithmsMost of us work with strings one way or another. There’s no way to avoid them — when writing code, you’re doomed to concatinate strings every day, split them into parts and access certain characters by index. We are used to the fact that strings are fixed-length arrays of characters, which leads to certain limitations when working with them. For instance, we cannot quickly concatenate two strings. To do this, we will at first need to allocate the required amount of memory, and then copy there the data from the concatenated strings. This operation is obviously of О(n) complexity, in which n — total length of strings. That’s exactly why code

string s = "";

for (int i = 0; i < 100000; i++) s += "a";

is so slow.

Want to perform concatenation of enormous strings really fast? Don’t like that a string requires a contiguous memory locations? Are you sick of using buffers to build strings?

The data structure to come for our rescue is called a Rope string. Its principle of operation is really simple and we can guess it basing on intuitive considerations.

The Idea

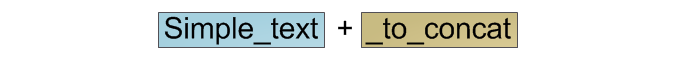

Let’s say we need to add two strings:

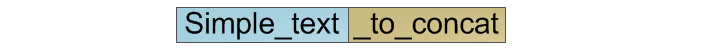

For classical strings, we will simply allocate some memory of the required size, copy the first string to its beginning, and the contents of the second one to its end:

As mentioned above, the complexity of this operation is O(n).

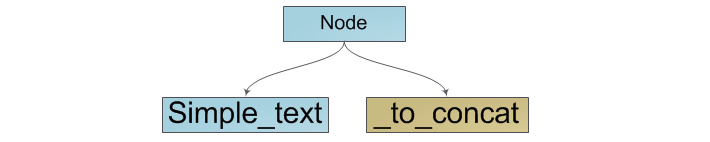

Knowing that our resulting string is the concatenation of the two initial ones, how can we use it? Really, let’s create an object that represents the string interface and contains information about its components — original strings:

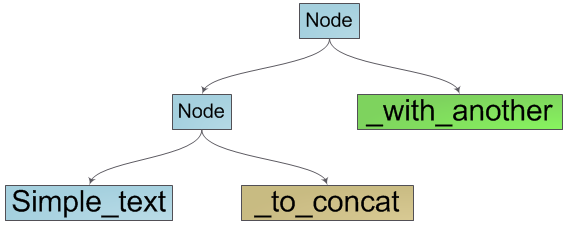

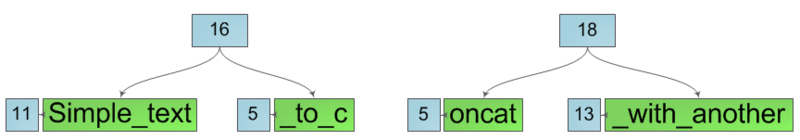

Such method of string concatenation works in О(1) — we should only create a wrapper object for original strings. Since this object is also a string, we can combine it with other strings for getting necessary concatenations:

It’s already clear that our structure is a binary search tree, the leaves of which contain elementary components of our string — character groups. What also becomes obvious is the way of listing string characters — it’s a tree traverse to the depth with characters listed sequentially in the leaves of the tree.

Indexing

Now, let’s implement the operation of obtaining a string character by its index. To do this, we will introduce an additional feature for tree nodes — weight. If a tree node stores a part of characters (node — leaf), its weight is equal to the number of these characters. Otherwise, a node weight is equal to the weight of its children. In other words, a node weight is the length of the string it represents.

We need to get the ith character of a string represented by Node node. Two cases may arise in this case:

- Node is a leaf. In this case, it contains the data itself, and it’s enough to return the i-th character of the “internal” string.

- Node is composite. Then it is necessary to find out what child of the node we should continue our search in. If the weight of the left child is greater than i — the desired character is the i-th character of the left substring. Otherwise, it is the (i-w)-th character of the right substring, where w is the weight of the left subtree.

After these calculations, the recursive variant of the algorithm (as well as iterative) becomes obvious.

So, we can concatenate strings in О(1) and index characters in them in О(h) — the height of the tree. But to be completely happy, we should learn how to quickly perform the operations of splitting into two strings, deleting and inserting a substring.

Split

So, we have a string, and we urgently need to break it into two substrings in some of its k position (numbers on the chart are sizes of corresponding trees):

The place of the “split” in a string is always in one of the tree leaves. Let’s split this leaf into two new ones that will contain substrings of the initial leaf. For this operation, we aren’t going to copy the leaf contents into new ones. We’ll simply introduce such leaf features as offset and length, and also save pointers to the character array of the initial one in new leaves, changing only the offset and length:

Then, we are going to split all nodes on the path from the leaf to the root, creating instead pairs of nodes that will belong to the left and right substring (that is being created) respectively. Besides, we do not change the current node but create two new ones instead of it. This means that the operation of splitting a string creates new substrings without affecting the initial one. After splitting the original string, we get two new strings, as shown below.

It is easy to notice that the internal structure of such strings is not optimal — some are obviously superfluous. However, it’s quite simple to fix this omission. It’s enough to loop through the substrings starting from the place of the split to the root, changing each node that has just one child with this very child. After this, all the unnecessary nodes will be gone, and we’ll obtain the required substrings in their final form:

The time complexity of the operation of splitting strings is obviously О(h).

Delete and Insert

Thanks to the already implemented operations of splitting and concatenating, we can easily perform insertion and deletion. To delete a substring, it’s enough to split the original string in place of the beginning and the end of the part to be removed, and concatenate the tails. For insertion, we split the original string into two substrings, and concatenate them in the correct order with the one to be inserted. Both operations are of О(h) asymptotics.

Optimization

Balancing

Hearing the word “tree”, a careful reader remembers two other ones: “logarithm” and “balancing”. To achieve the desired logarithmic asymptotics, we’ll have to balance our tree. Taking into account the current way of concatenating strings, the internal structure of the tree will look more like “stairs”, something like shown below:

To avoid this, we’ll check the balance of the result at every string concatenation, and rebuild the entire tree when necessary, balancing it. A good balance condition is when the string length not less than the (h+2)-th Fibonacci number. The rationale for this condition, as well as some additional modifications of the concatenation operation, have been provided by Hans-j. Boehm, Russ Atkinson and Michael Plass in their work «Ropes: an Alternative to Strings».

Direct Concatenation of Small Strings

Storage in memory of tree nodes is not free at all. Adding one character to a string, we spend much more memory on storing information about tree nodes than on string characters. To avoid such situations, and also to reduce the height of the tree, it is reasonable to concatenate strings of less than a certain length (eg, 32) in a “classic” way. This will greatly save memory and have almost no impact on performance.

Caching the Last Position

In the majority of cases, we iterate string characters sequentially. In our case, when we access characters by i and i+1 indices, there’s a very good chance that they’re in the same leaf of the tree. This means that when searching for these characters, we are going to repeat the same path from the root of the tree to the leaf. Caching is the obvious solution to this problem. When searching for another character, we memorize the leaf it is stored in, as well as the range of indices the leaf contains. After that, when searching for another character, we will at first check whether the index is within the memorized range. If so, we will look for it directly in the memorized leaf. We can even go further and memorize not only the last position but several, eg in a cyclic list.

Together with the previous optimization, such technique will allow us to improve the asymptotic behavior of the indexing from O(ln(n)) almost to О(1).

Summary

What will we get as a result? We’ll get a persistent implementation of a string that does not require continuous memory area for its storage, with the logarithmic asymptotics of insertion, deletion, and concatenation (instead of O(n) in classic strings) and indexing almost in О(1) — the result is quite noticeable.

Finally, I’m providing a couple of useful links: Wikipedia, implementation in Java and description of С++ implementation on SGI.

Use the ropes, Luke!

Comments

Dale King