YAWNDB: A Time Series Database Written in Erlang

I work at Selectel, and many of our clients who lease dedicated servers need information about their traffic consumption. Cloud server users need statistics on hardware and network resource usage, and cloud storage users need download statistics.

The simplest way to present statistical data is to plot it. There are plenty of software solutions for analyzing and visualizing statistical data. We were looking for an appropriate tool, with high performance as the basic requirement. But let's begin with some theory.

Some Theory

Every chart of online activity shows how some parameters change over a defined period of time (a month, a week, a day, etc.). To build a chart, we need to process statistical material: a set of "time-value" pairs collected over that period. Such material is called a time series.

There are quite a few software tools for analyzing time series, and they share one common feature: they use a Round Robin Database (RRD). A round robin database keeps the amount of stored data constant over time.

A Round Robin Database stores one or more data sets combined into Round Robin Archives (RRA). Structurally, these archives are circular arrays: the last element is followed by the first one, so new records overwrite the oldest. The archives are chained so that each successive archive is built from the previous one: the first archive stores data with a short interval between records, the second stores values consolidated from the first over a specified number of intervals, the third does so even more coarsely, and so on.
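To make the circular structure concrete, here is a minimal Python sketch of a fixed-capacity circular array. The `RingBuffer` class and its methods are our own illustration, not RRDtool's API or file format: once the array is full, each new record overwrites the oldest one, so the archive never grows.

```python
class RingBuffer:
    """Fixed-capacity circular array: once full, each new record
    overwrites the oldest one, so memory use never grows."""

    def __init__(self, capacity):
        self.slots = [None] * capacity
        self.capacity = capacity
        self.next = 0   # index the next record will be written to
        self.count = 0  # number of records stored so far (<= capacity)

    def append(self, record):
        self.slots[self.next] = record
        # after the last slot we wrap around to the first one
        self.next = (self.next + 1) % self.capacity
        self.count = min(self.count + 1, self.capacity)

    def items(self):
        """Return the stored records from oldest to newest."""
        start = (self.next - self.count) % self.capacity
        return [self.slots[(start + i) % self.capacity] for i in range(self.count)]


buf = RingBuffer(3)
for t in range(5):
    buf.append(t)
print(buf.items())  # [2, 3, 4]
```

Five records went in, but only the three newest survive, and the list backing the buffer never grows beyond three slots.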

Built-in consolidation functions serve this purpose, and they are applied automatically whenever data is updated. By consolidation functions I mean computing the minimum, maximum, average, or total value over a specified time frame. In RRD, consolidation happens on write rather than on read, which makes reads faster.
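Here is a small Python sketch of consolidation at write time, following the cascade described above: a fine archive feeds a coarser one, and each coarse row is computed as soon as enough fine rows arrive, so reading it later needs no extra work. `Archive`, `steps`, and `cf` are hypothetical names chosen for this example; RRDtool's real implementation and file format differ.

```python
from collections import deque


class Archive:
    """One round robin archive: a fixed-size deque of consolidated rows."""

    def __init__(self, steps, rows, cf):
        self.steps = steps               # how many input values form one row
        self.cf = cf                     # consolidation function (min, max, sum, ...)
        self.rows = deque(maxlen=rows)   # the oldest rows drop off automatically
        self.pending = []                # inputs waiting to be consolidated

    def update(self, value):
        """Consolidate on write; return the new row if one was completed."""
        self.pending.append(value)
        if len(self.pending) < self.steps:
            return None
        row = self.cf(self.pending)
        self.pending = []
        self.rows.append(row)
        return row


def average(values):
    return sum(values) / len(values)


# a fine archive (one row per sample) feeding a coarse one (one row per 4 fine rows)
fine = Archive(steps=1, rows=8, cf=average)
coarse = Archive(steps=4, rows=8, cf=average)

for sample in [1, 2, 3, 4, 5, 6, 7, 8]:
    row = fine.update(sample)
    if row is not None:
        coarse.update(row)

print(list(coarse.rows))  # [2.5, 6.5]
```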

RRDtool

The most popular and widespread tool for analyzing and visualizing time series is RRDtool.

We tried it in our own practice, but the experience was unsatisfying. There were many reasons for that, but the main one was that it could not handle high loads.

We ran into many problems once the number of files we were writing to exceeded a thousand. For example, writes took too long, and sometimes data simply was not written at all, even though there were no errors or crashes.

These facts were enough to conclude that RRDtool did not fit our needs: given the large number of virtual machines in our cloud, it would have to perform tens of thousands of writes per second.

For large data volumes, the number of write operations can be reduced with the RRDcacheD daemon. It caches incoming data and writes it to the database once a specified amount has accumulated. In our practice, however, RRDcacheD was not suitable for such tasks either.

While accumulating data, it does not allow reading it from the cache; it just writes the data to disk. So to process the data, we have to write everything to disk and then read it all back. The larger the data volume, the worse the cache performs, and since the disk is heavily loaded, the processor gets additional load as well.

Another peculiarity of RRDcacheD is that it writes data to disk at the most unexpected and inconvenient moments.

RRDtool also makes it impossible to change the settings of an existing RRD. Of course, you can work around this by exporting the data, creating a file with new parameters, and importing the old data into it, but that is too complicated.

Having faced these problems, we decided not to use RRDtool. We also tried other data processing and visualization tools, such as Graphite, but its performance was too low.

Since we had not found the perfect tool, we decided to develop our own solution that would meet all of our requirements. It had to be flexible, configurable, and adaptable to the specifics of particular services, and its performance had to be high. That is how YAWNDB appeared.

YAWNDB: General Info

YAWNDB (YAWN stands for Yet Another iNvented Wheel) is an in-memory circular array database written in Erlang. All data is stored in memory and periodically written to disk. It is built on Erlang's lightweight process model, which allows it to process large amounts of data without consuming excessive system resources.

Incoming data is distributed among archives (called buckets in YAWNDB terminology) according to certain rules.

A rule is a set of properties for a set of statistical data (e.g., data size, data collection frequency, etc.).

All data is represented as triplets consisting of a time, a value, and a key. The key (also called a path in YAWNDB terminology) is a sequence of alphanumeric characters that defines where the triplet will be stored.

The most important part of the path is its first component, the prefix. Each rule has a prefix field and thus defines how data is stored for that particular prefix. There can be several rules for the same prefix; in that case, the triplet is written to the database according to each of them.

This means that a given "time-value" pair is put into N buckets, where N is the number of rules matching the prefix of its path.
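The routing rule can be sketched in Python as follows. The rule values are taken from the configuration example later in this article; the `Rule` class, the `buckets_for` helper, and especially the assumption that the prefix is whatever precedes the first `-` in the path are ours, made only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    name: str
    prefix: str
    timeframe: int  # seconds covered by one slot
    limit: int      # number of slots kept


# Assumption for illustration only: the prefix is the part of the path
# before the first "-". Check the YAWNDB sources for the real separator.
SEPARATOR = "-"


def prefix_of(path):
    return path.split(SEPARATOR, 1)[0]


def buckets_for(path, rules):
    """Return one (path, rule name) bucket per rule matching the path prefix."""
    prefix = prefix_of(path)
    return [(path, rule.name) for rule in rules if rule.prefix == prefix]


RULES = [
    Rule("per_min", "clientstats", 60, 1440),
    Rule("per_hour", "clientstats", 3600, 720),
    Rule("per_day", "clientstats", 86400, 730),
]

print([name for _, name in buckets_for("clientstats-user42-traffic", RULES)])
# ['per_min', 'per_hour', 'per_day']
print(buckets_for("otherstats-user42", RULES))  # []
```

Three rules share the `clientstats` prefix, so N = 3 here; a path with an unknown prefix matches no rule and is stored nowhere.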

This approach offers the following benefits:

- obsolete data is removed without excessive resource consumption;

- memory usage is fixed for a fixed number of keys;

- access time is fixed for any record.
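The following Python sketch shows why a time-indexed circular bucket has these properties. It is not Ecirca's implementation; `SumBucket` is a hypothetical class that mimics a rule with `type: sum`. A timestamp maps directly to a slot, so any access costs one division and one modulo, memory is fixed at `limit` slots, and obsolete values are simply overwritten in place instead of being deleted.

```python
class SumBucket:
    """Fixed-size bucket indexed by time slot (an illustration, not Ecirca)."""

    def __init__(self, timeframe, limit):
        self.timeframe = timeframe    # seconds per slot
        self.limit = limit            # number of slots kept
        # each slot holds (slot number, accumulated value) or None
        self.slots = [None] * limit

    def add(self, timestamp, value):
        slot_no = timestamp // self.timeframe
        i = slot_no % self.limit
        current = self.slots[i]
        if current is None or current[0] < slot_no:
            # empty, or holds obsolete data: overwrite it in place
            self.slots[i] = (slot_no, value)
        elif current[0] == slot_no:
            # same time frame: accumulate, as for `type: sum`
            self.slots[i] = (slot_no, current[1] + value)
        # otherwise the point is older than the retained window; drop it

    def get(self, timestamp):
        slot_no = timestamp // self.timeframe
        current = self.slots[slot_no % self.limit]
        if current is None or current[0] != slot_no:
            return None  # never written, or already overwritten
        return current[1]


bucket = SumBucket(timeframe=60, limit=1440)  # one day of per-minute sums
bucket.add(0, 5)
bucket.add(30, 7)
print(bucket.get(59))     # 12
bucket.add(86400, 1)      # one day later: reuses slot 0
print(bucket.get(0))      # None
print(bucket.get(86400))  # 1
```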

Architecture

YAWNDB is built on a circular array algorithm implemented in Ecirca, a library written in C.

The YAWNDB modules, written in Erlang, interact with Ecirca via NIFs (natively implemented functions). Data persistence is handled by Bitcask, an application written in Erlang.

The REST API is built on the Cowboy web server. Data is written through a socket and read through the REST API.

Basic Ideas

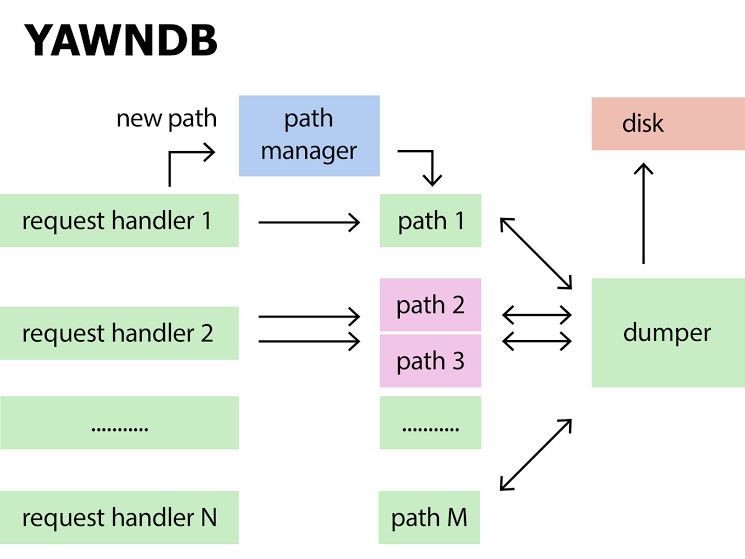

Each path has its own process (path N in the diagram), which stores the data itself along with some service information. Each incoming request is processed by an available request handler (request handler N); if no handler is available, YAWNDB creates one. The handler passes the request parameters to the path manager, which retrieves the required data from the appropriate path processes.

The dumper process is responsible for saving data to disk. It polls all path processes and saves the data that has changed since the last save.
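The dumper's "save only what changed" policy can be illustrated with a single-threaded Python sketch. Plain objects stand in for Erlang path processes and a callback stands in for Bitcask; `PathStore`, `dump`, and the `dirty` flag are hypothetical names, and the real YAWNDB processes communicate by message passing rather than direct method calls.

```python
class PathStore:
    """Stand-in for one path process: holds its data and a 'changed' flag."""

    def __init__(self, path):
        self.path = path
        self.points = {}
        self.dirty = False

    def write(self, timestamp, value):
        self.points[timestamp] = value
        self.dirty = True


def dump(stores, save):
    """Poll every path and save only those changed since the last dump.

    `save` is any callable that persists one path's data.
    Returns the list of paths that were saved.
    """
    saved = []
    for store in stores:
        if store.dirty:
            save(store.path, dict(store.points))
            store.dirty = False
            saved.append(store.path)
    return saved


disk = {}
a, b = PathStore("clientstats-a"), PathStore("clientstats-b")
a.write(60, 1)
print(dump([a, b], disk.__setitem__))  # ['clientstats-a']
print(dump([a, b], disk.__setitem__))  # []
```

Unchanged paths cost only a flag check per poll, which keeps periodic dumps cheap when most keys are idle.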

Installation

To begin working with YAWNDB, first install the LibYAML parser on your machine. Then clone the repository:

```shell
$ git clone git@github.com:selectel/yawndb.git
```

and run the following commands:

```shell
$ cd yawndb
$ make all
```

Before launching, copy the example configuration file (or create an appropriate symlink):

```shell
$ cp priv/yawndb.yml.example priv/yawndb.yml
```

Then run YAWNDB with:

```shell
$ ./start.sh
```

Configuration

All YAWNDB settings are kept in the configuration file yawndb.yml.

As an example, consider the following configuration file, which is used to process statistics for the cloud storage:

```yaml
rules:
  # User statistics

  # statistics per minute (last 24 hours)
  - name: per_min
    prefix: clientstats
    type: sum
    timeframe: 60
    limit: 1440
    split: backward
    value_size: large
    additional_values: []

  # statistics per hour (last month)
  - name: per_hour
    prefix: clientstats
    type: sum
    timeframe: 3600
    limit: 720
    split: backward
    value_size: large
    additional_values: []

  # statistics per day (last two years)
  - name: per_day
    prefix: clientstats
    type: sum
    timeframe: 86400
    limit: 730
    split: backward
    value_size: large
    additional_values: []
```
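A quick sanity check of the comments in this configuration: the time window a rule covers is `timeframe * limit` seconds, and since every `clientstats` path is stored under all three rules, the number of slots kept per path is the sum of their limits. The snippet below just does that arithmetic; `retention_seconds` is a helper name of our own.

```python
# (timeframe in seconds, limit in slots) for each rule in the config above
RULES = {
    "per_min": (60, 1440),
    "per_hour": (3600, 720),
    "per_day": (86400, 730),
}


def retention_seconds(timeframe, limit):
    """Time span a bucket covers: slot length times number of slots."""
    return timeframe * limit


for name, (timeframe, limit) in RULES.items():
    days = retention_seconds(timeframe, limit) / 86400
    print(f"{name}: {days:g} day(s)")
# per_min: 1 day(s)
# per_hour: 30 day(s)
# per_day: 730 day(s)

# every "clientstats" path is stored under all three rules
total_slots = sum(limit for _, limit in RULES.values())
print(total_slots)  # 2890
```

So the three buckets cover one day, 30 days, and 730 days respectively, matching the comments, and each path costs a fixed 2890 slots regardless of how long it has been written to.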

Example Use

Building a Packet for Writing (Python)

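```python
import struct

# Protocol version; 3 is the value used in the C example below
YAWNDB_PROTOCOL_VERSION = 3


def encode_yawndb_packet(is_special, path, time, value):
    """
    Build a packet to send to yawndb.

    :param bool is_special: special value? Used for additional_values
    :param path: metric identifier (str or bytes)
    :param int time: timestamp of the value
    :param int value: metric value
    """
    if isinstance(path, str):
        path = path.encode("utf-8")
    is_special_int = 1 if is_special else 0
    # body: version (1 byte), special flag (1 byte), timestamp (8 bytes),
    # value (8 bytes), all big-endian, followed by the path bytes
    pck_tail = struct.pack(
        ">BBQQ",
        YAWNDB_PROTOCOL_VERSION, is_special_int, time, value
    ) + path
    # header: 2-byte big-endian length of the body
    pck_head = struct.pack(">H", len(pck_tail))
    return pck_head + pck_tail
```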
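Because each packet starts with a 2-byte length of its body, several packets can be sent back to back over one connection. Below is a sketch of the matching decoder, handy for checking an encoder's output in tests; `iter_yawndb_packets` is our own helper, not part of YAWNDB, and it assumes the layout shown above (version, special flag, timestamp, value, then the path).

```python
import struct

HEADER = struct.Struct(">H")    # 2-byte big-endian body length
BODY = struct.Struct(">BBQQ")   # version, special flag, timestamp, value


def iter_yawndb_packets(data):
    """Split a byte stream into packets and decode each one.

    Yields (version, is_special, time, value, path) tuples and
    raises ValueError on a truncated packet.
    """
    offset = 0
    while offset < len(data):
        if offset + HEADER.size > len(data):
            raise ValueError("truncated length header")
        (length,) = HEADER.unpack_from(data, offset)
        start = offset + HEADER.size
        end = start + length
        if end > len(data) or length < BODY.size:
            raise ValueError("truncated packet")
        version, special, time, value = BODY.unpack_from(data, start)
        yield version, bool(special), time, value, data[start + BODY.size:end]
        offset = end


body = struct.pack(">BBQQ", 3, 0, 1700000000, 42) + b"clientstats-u1"
stream = struct.pack(">H", len(body)) + body
for packet in iter_yawndb_packets(stream * 2):
    print(packet)  # (3, False, 1700000000, 42, b'clientstats-u1'), twice
```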

Building a Packet for Writing (C)

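```c
#define _DEFAULT_SOURCE  // expose htobe16/htobe64 from <endian.h> on glibc
#include <endian.h>
#include <stdint.h>
#include <stdlib.h>
#include <string.h>

// define the protocol version
#define YAWNDB_PROTOCOL_VERSION 3

// describe the packet structure; packed (a GCC/Clang extension) so the
// in-memory layout matches the wire format, with no padding before timestamp
struct yawndb_packet_struct {
    uint16_t length;
    uint8_t  version;
    int8_t   isSpecial;
    uint64_t timestamp;
    uint64_t value;
    char     path[];
} __attribute__((packed));

// build a packet; the caller sends length + sizeof(uint16_t) bytes
// and frees the result
struct yawndb_packet_struct *encode_yawndb_packet(int8_t isSpecial,
        uint64_t timestamp, uint64_t value, const char *path) {
    struct yawndb_packet_struct *packet;
    size_t path_len = strlen(path);
    size_t length;

    length = sizeof(uint8_t) + sizeof(int8_t) + sizeof(uint64_t)
           + sizeof(uint64_t) + path_len;
    if (length > UINT16_MAX)
        return NULL;  // does not fit in the 16-bit length field

    packet = malloc(length + sizeof(uint16_t));
    if (packet == NULL)
        return NULL;

    packet->length = htobe16((uint16_t)length);
    packet->version = YAWNDB_PROTOCOL_VERSION;
    packet->isSpecial = isSpecial;
    packet->timestamp = htobe64(timestamp);
    packet->value = htobe64(value);
    // the path is sent without a terminating NUL
    memcpy(packet->path, path, path_len);

    return packet;
}
```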

Summary

YAWNDB is used in our public services as well as in internal projects. The project source code is available on GitHub. You are welcome to use it and share your feedback.
